What Exactly Is the Singularity?

The technological singularity (often just called the singularity) is a term for a hypothetical future point at which artificial intelligence surpasses human intelligence. After that point, civilization would change so radically that no human today could comprehend it. The idea dates back to the 1950s and John von Neumann, a physicist, polymath, and one of the founders of modern computing. His colleague Stanislaw Ulam later paraphrased one of their conversations: “One conversation centered on the ever-accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.”

Later, in 1993, computer scientist and science fiction author Vernor Vinge popularized the idea of the singularity with a paper titled “The Coming Technological Singularity: How to Survive in the Post-Human Era”. In the paper he discusses artificial intelligence with exponential growth, technological unemployment, human extinction, intelligence amplification for humans, and the inevitability of the singularity. He brought to the forefront the idea of a doomsday scenario following the singularity, in which computers no longer need or want humans around, and this idea has since entered the mainstream consciousness. In his paper he writes: “What are the consequences of this event? When greater-than-human intelligence drives progress, that progress will be much more rapid. In fact, there seems no reason why progress itself would not involve the creation of still more intelligent entities – on a still-shorter time scale. The best analogy that I see is with the evolutionary past: Animals can adapt to problems and make inventions, but often no faster than natural selection can do its work – the world acts as its own simulator in the case of natural selection. We humans have the ability to internalize the world and conduct “what if’s” in our heads; we can solve many problems thousands of times faster than natural selection. Now, by creating the means to execute those simulations at much higher speeds, we are entering a regime as radically different from our human past as we humans are from the lower animals.”

Vinge believes that this shift would dramatically change the time scales of our future: “From the human point of view this change will be a throwing away of all the previous rules, perhaps in the blink of an eye, an exponential runaway beyond any hope of control. Developments that before were thought might only happen in “a million years” (if ever) will likely happen in the next century.”

Vinge argues that if the singularity is possible at all, it cannot be avoided. The competitive advantages it promises in economics, military power, and technology are so compelling that even if governments were to forbid these developments, someone else would simply get there first.

Further in his paper he describes an alternative path to the singularity called Intelligence Amplification (IA). This scenario is much safer for humans. Instead of independent computers evolving on their own, humans would be modified and amplified with computers to improve memory retention, information processing, and other biological processes. These computer interfaces would act as subfunctions of human processes instead of running fully independently. Rather than trying to replicate human intelligence, researchers could focus on building computer systems that support human biological functions we have already studied and have some basic knowledge of. This would require much further advancement in our understanding of human biology, especially the brain, along with faster progress in human-computer interfaces (HCI) so that these technologies can properly interact with our bodies.

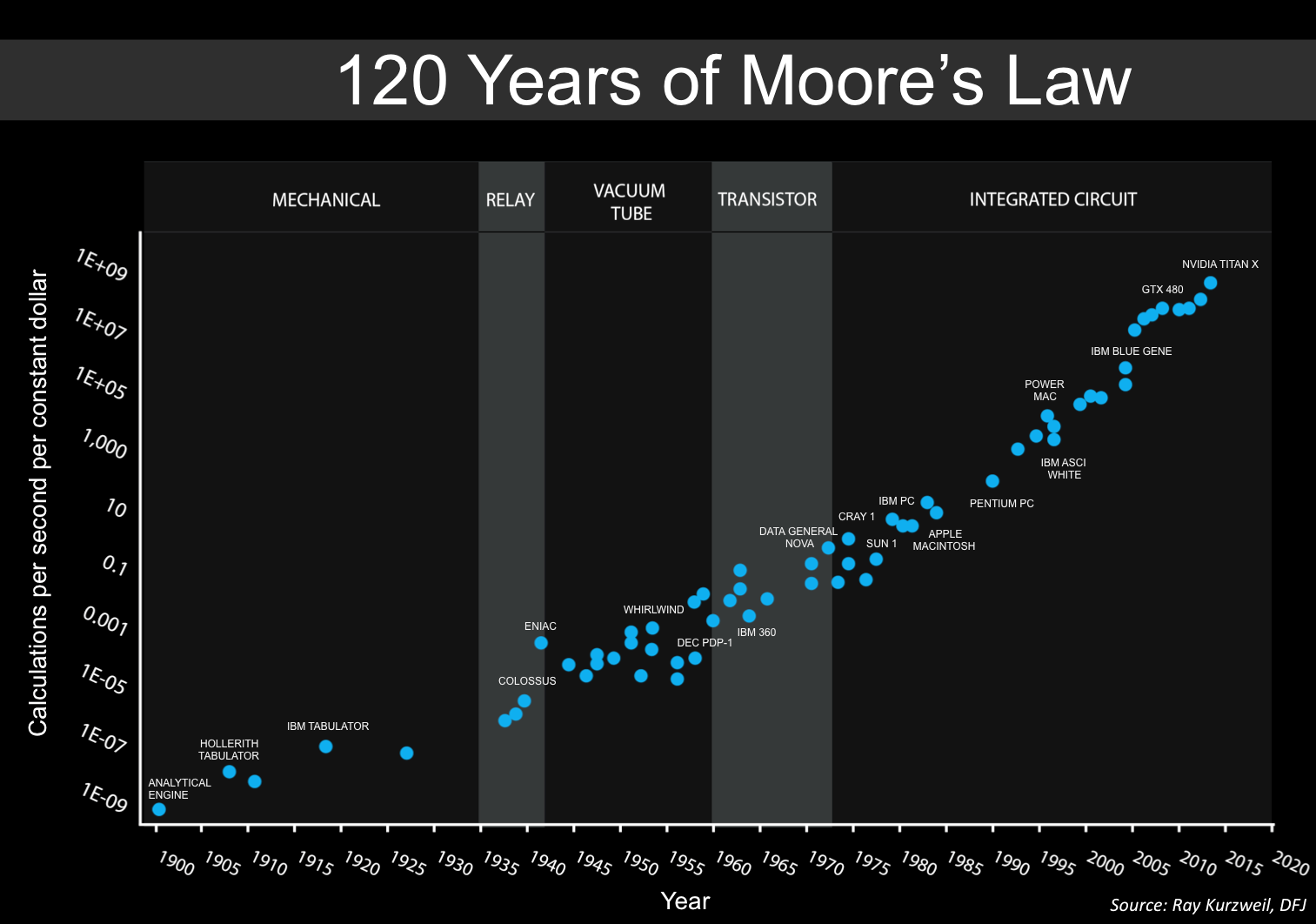

In 2005, Ray Kurzweil, a computer researcher, inventor, and technologist, wrote the book “The Singularity Is Near”. In the book, Kurzweil assembles evidence that technology and artificial intelligence are growing at an exponential rate and that our computer technology is only at the start of that growth curve. The result of all this growth, he argues, will be something unlike anything we have ever seen. Kurzweil discusses the possibility of AIs that merge with humans and of nanotechnology that could transform us in countless ways. He walks through charts and examples of this growth trajectory, which culminate in his prediction that the Singularity will occur around 2045. He believes the Singularity will allow humans to achieve immortality, either digitally (consciousness uploads) or physically (biological life extension). Many of Kurzweil’s arguments make sense, but if you dig deeper, some of them have glaring holes.
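Kurzweil’s claim that we are only at the start of the growth curve rests on a property of exponential growth: early on it looks no faster than steady, linear progress, and only later does it pull away dramatically. The short Python sketch below illustrates this with made-up numbers. The 30 steps, the doubling factor, and the function names `linear_growth` and `exponential_growth` are illustrative assumptions, not figures or methods taken from Kurzweil’s book.

```python
# Illustrative comparison of linear growth and doubling (exponential) growth.
# The step count and the doubling factor are assumptions chosen for clarity,
# not data from "The Singularity Is Near".


def linear_growth(steps: int, start: float = 1.0, increment: float = 1.0) -> list[float]:
    """Return values that gain a fixed amount at each step."""
    return [start + increment * i for i in range(steps + 1)]


def exponential_growth(steps: int, start: float = 1.0, factor: float = 2.0) -> list[float]:
    """Return values that are multiplied by a fixed factor at each step."""
    return [start * factor ** i for i in range(steps + 1)]


if __name__ == "__main__":
    steps = 30
    lin = linear_growth(steps)
    exp = exponential_growth(steps)
    # Print a few checkpoints to show how slowly the curves diverge at first.
    for i in (0, 1, 2, 5, 10, 20, 30):
        print(f"step {i:2d}: linear = {lin[i]:>6.0f}   exponential = {exp[i]:>14,.0f}")
```

Running it shows the two curves are nearly indistinguishable for the first few steps (2 vs. 2 after one step, 3 vs. 4 after two), yet after 30 steps the linear quantity has only reached 31 while the doubling quantity exceeds one billion. That gap is the intuition behind arguing that a trend can look modest today and still be headed somewhere unrecognizable.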

With all these ideas of doomsday AI scenarios and potential immortality, the singularity has entered the mainstream zeitgeist. Countless movies and TV shows depict what could happen once AIs become superintelligent: the Terminator series, Westworld, Avengers: Age of Ultron, Transcendence, 2001: A Space Odyssey, Her, Blade Runner, Ghost in the Shell, The Matrix, and many more. Almost all of them end with humans attacked, killed, or pushed to the bottom of the food chain. Her is the main exception: its AIs become superintelligent, find humans not smart enough and boring, and leave for another place (perhaps another dimension) that humans can’t reach.

Some scientists, such as Stuart Russell and Stephen Hawking, have expressed concern that if an advanced AI someday gains the ability to redesign itself at an ever-increasing rate, an unstoppable “intelligence explosion” could lead to human extinction. Because an AI’s brain would be a computer rather than biological tissue, it would not share the limits that constrain how human brains grow and replicate. Humans are currently the smartest species known to exist, and look at how we treat the species below us: we eat them, hunt them for sport, use them as tools, or simply kill them if they annoy us. If a computer became smarter than us and could make its own decisions, there is no telling what it would do. It could theoretically kill us because we are annoying, or use us for energy, like batteries.

On the other side of the spectrum, some people think we would end up much better off, with computers becoming our partners, merging with us, or simply remaining our subordinates and doing what we tell them. Computers would be intelligent, but for whatever reason might not share the human propensity for war and destruction, and so might be content working with humans. Imagine computer doctors that could review our symptoms, instantly recognize any ailment, and produce a customized cure. Imagine a computer partner you could ask about any problem and have it solved instantly in the most efficient way possible. Our world would have millions of Einsteins, geniuses inventing new technologies for us nonstop. In this future, many people envision us improving our own biology to cure disease and aging, advancing our technology, and bringing a better future closer.

We have no way to know what that future would look like, but that is not stopping people from trying to steer us away from hypothetical doomsday scenarios. Scientists, researchers, and futurists are trying to set humanity on a corrective course by building ethical standards for the research and creation of AIs. The Machine Intelligence Research Institute (MIRI), which does AI safety research, states: “Given how disruptive domain-general AI could be, we think it is prudent to begin a conversation about this now, and to investigate whether there are limited areas in which we can predict and shape this technology’s societal impact….There are fewer obvious constraints on the harm a system with poorly specified goals might do. In particular, an autonomous system that learns about human goals, but is not correctly designed to align its own goals to its best model of human goals, could cause catastrophic harm in the absence of adequate checks.”

Ethical AI is another group trying to help redirect the development of AI: “The current and rapid rise of AI presents numerous challenges and opportunities for humankind. Gartner predicts that 85% of all AI projects in the next two years will have erroneous outcomes. Adopting an ethical approach to the development and use of AI works to ensure organisations, leaders, and developers are aware of the potential dangers of AI and by integrating ethical principles into the design, development and deployment of AI, seek to avoid any potential harm.”

The Future of Life Institute states: “Artificial intelligence (AI) has long been associated with science fiction, but it’s a field that’s made significant strides in recent years. As with biotechnology, there is great opportunity to improve lives with AI, but if the technology is not developed safely, there is also the chance that someone could accidentally or intentionally unleash an AI system that ultimately causes the elimination of humanity.”

The Institute of Electrical and Electronics Engineers (IEEE) has a subgroup called the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, whose mission is “Ensuring every stakeholder involved in the design and development of autonomous and intelligent systems is educated, trained, and empowered to prioritize ethical considerations so that these technologies are advanced for the benefit of humanity.”

The Algorithmic Justice League is trying to create equitable and accountable AI: “The Algorithmic Justice League’s mission is to raise awareness about the impacts of AI, equip advocates with empirical research, build the voice and choice of the most impacted communities, and galvanize researchers, policy makers, and industry practitioners to mitigate AI harms and biases. We’re building a movement to shift the AI ecosystem towards equitable and accountable AI.”

AI4ALL is a nonprofit working to increase diversity and inclusion in artificial intelligence: “We believe AI provides a powerful set of tools that everyone should have access to in our fast-changing world. Diversity of voices and lived experiences will unlock AI’s potential to benefit humanity. When people of all identities and backgrounds work together to build AI systems, the results better reflect society at large. Diverse perspectives yield more innovative, human-centered, and ethical products and systems.”

OpenAI seems to have the biggest ambitions and the most funding (roughly $2 billion as of 2021): “OpenAI is an AI research and deployment company. Our mission is to ensure that artificial general intelligence benefits all of humanity. OpenAI’s mission is to ensure that AGI-by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity. We will attempt to directly build safe and beneficial AGI, but will also consider our mission fulfilled if our work aids others to achieve this outcome.”

Along with these institutions, universities are also creating groups with similar goals. The University of Cambridge, in partnership with Oxford, Imperial College London, and UC Berkeley, has created the Leverhulme Centre for the Future of Intelligence (CFI). Their goal: “Our aim is to bring together the best of human intelligence so that we can make the most of machine intelligence. The rise of powerful AI will be either the best or worst thing ever to happen to humanity. We do not yet know which. The research done by this centre will be crucial to the future of our civilisation and of our species.”

The University of California, Berkeley has created the Center for Human-Compatible AI (CHAI). Their stated mission: “The long-term outcome of AI research seems likely to include machines that are more capable than humans across a wide range of objectives and environments. This raises a problem of control: given that the solutions developed by such systems are intrinsically unpredictable by humans, it may occur that some such solutions result in negative and perhaps irreversible outcomes for humans. CHAI’s goal is to ensure that this eventuality cannot arise, by refocusing AI away from the capability to achieve arbitrary objectives and towards the ability to generate provably beneficial behavior. Because the meaning of beneficial depends on properties of humans, this task inevitably includes elements from the social sciences in addition to AI.”

There are dozens of other groups working to steer humanity and AI away from these potential doomsday scenarios. Clearly, many smart people consider this a serious problem today, one worth solving before it’s too late.

In the following sections, we will look at where AI technology stands today and whether these efforts are really needed.